If you are a creator and want to protect your art, photos, and images from Generative AI, this article will show you how to poison Generative AI crawlers and scrapers whenever they come after your work. The tools covered here are one of the few ways to push back against Generative AI stealing and copying your art and artistic designs.


Key Points

- Learn how to protect all of your digital art and images from Generative AI art crawlers and scrapers.

- Take an offensive stance by subtly altering images at the pixel level, which confuses AI models without significantly changing how the image looks to the human eye.

- Learn how to poison AI models that come after your artwork and content.

Stopping Generative AI Models From Stealing Your Content.

In order to prevent AI models from stealing your content, you are going to have to employ two different tools that alter your content at the pixel level, which essentially disguises its true look and style. The AI crawler then copies this junk image, which ultimately poisons the model trained on it.

TOOL 1: GLAZE - Defence

The first tool is called Glaze, a defensive tool that disrupts style mimicry. To put it simply, Glaze works by understanding the AI models that are training on human art and using machine learning algorithms to change artwork so that it appears unchanged to human eyes but completely different to the AI. For example, you might have a picture of a bowl of fruit, but the AI will see a pile of rocks.
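To make the idea of a small, bounded pixel-level change more concrete, here is a minimal sketch in Python. It is not how Glaze computes its cloak: Glaze optimizes its changes against AI models, whereas this example uses random noise as a stand-in and offers no protection at all. The `cloak` function and its `budget` parameter are hypothetical names used only for illustration.

```python
import random

def cloak(pixels, budget=4, seed=0):
    """Return a copy of `pixels` (ints 0-255), each shifted by at most `budget`.

    Random noise is a placeholder for the optimized changes a real tool
    would compute; the point is only that every pixel moves very little.
    """
    rng = random.Random(seed)
    cloaked = []
    for value in pixels:
        shift = rng.randint(-budget, budget)
        # Clamp to the valid 0-255 range for an 8-bit channel.
        cloaked.append(min(255, max(0, value + shift)))
    return cloaked

original = [0, 12, 128, 200, 255]
protected = cloak(original, budget=4)

# No pixel value moves by more than the budget.
max_change = max(abs(a - b) for a, b in zip(original, protected))
print(max_change <= 4)  # True
```

A small budget is what keeps the change hard to notice; the intensity setting described later in this article plays a similar role, trading visibility for strength.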

There are quite a few different versions of Glaze available so get the one that works on your system. You might want to read ahead a little before choosing one as they do vary a little in performance.

Glaze 1.1.1, Windows GPU Version, for Windows 10/11 (2.7GB w/PyTorch GPU libraries included)

Glaze 1.1.1, Windows Standard Version (Non-GPU), for Windows 10/11

Glaze 1.1.1, MacOS - Apple Silicon, for Macs with M1/M2 processors

Glaze 1.1.1, MacOS - Intel, for Macs with Intel processors. Must be running MacOS 13.0 or higher
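If you are not sure which build matches your machine, a short Python script can report it. This is only a sketch: `suggest_build` is a hypothetical helper, and the mapping is an assumption based on the list above. Note that Python running under Rosetta on an Apple Silicon Mac may report an Intel architecture.

```python
import platform

def suggest_build(system, machine):
    """Map an OS name and CPU architecture to one of the builds listed above."""
    machine = machine.lower()
    if system == "Windows":
        return "Windows GPU Version or Windows Standard Version (Non-GPU)"
    if system == "Darwin":  # platform.system() reports macOS as "Darwin"
        if machine in ("arm64", "aarch64"):
            return "MacOS - Apple Silicon"
        return "MacOS - Intel"
    return "No matching build in the list above"

print(suggest_build(platform.system(), platform.machine()))
```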

TOOL 2: NIGHTSHADE - Offense

The second tool is called Nightshade. It works in a similar way to Glaze but is designed as an offensive tool rather than a defensive one. It distorts feature representations inside generative AI image models through an optimization that minimizes visible changes to the original image at the pixel level.

There are quite a few different versions of Nightshade available so get the one that works on your system. You might want to read ahead a little before choosing one as they do vary a little in performance.

FAST link for Windows Nightshade v1.0

FAST link for Mac Nightshade v1.0

Nightshade 1.0, Windows GPU/CPU Version, for Windows 10/11 (2.6GB w/PyTorch GPU libraries included)

Nightshade 1.0, MacOS (CPU Only) - Apple Silicon, M1/M2/M3 processors (255MB)

Now that you have your Generative AI protection arsenal ready, here's how to use it.

The next part of the process is using both of these tools to protect your content from AI theft. It takes a little bit of time and effort but the results are quite good. Depending on your results you may only want to use one of the tools. It's not the highest level of protection but it's better than nothing.

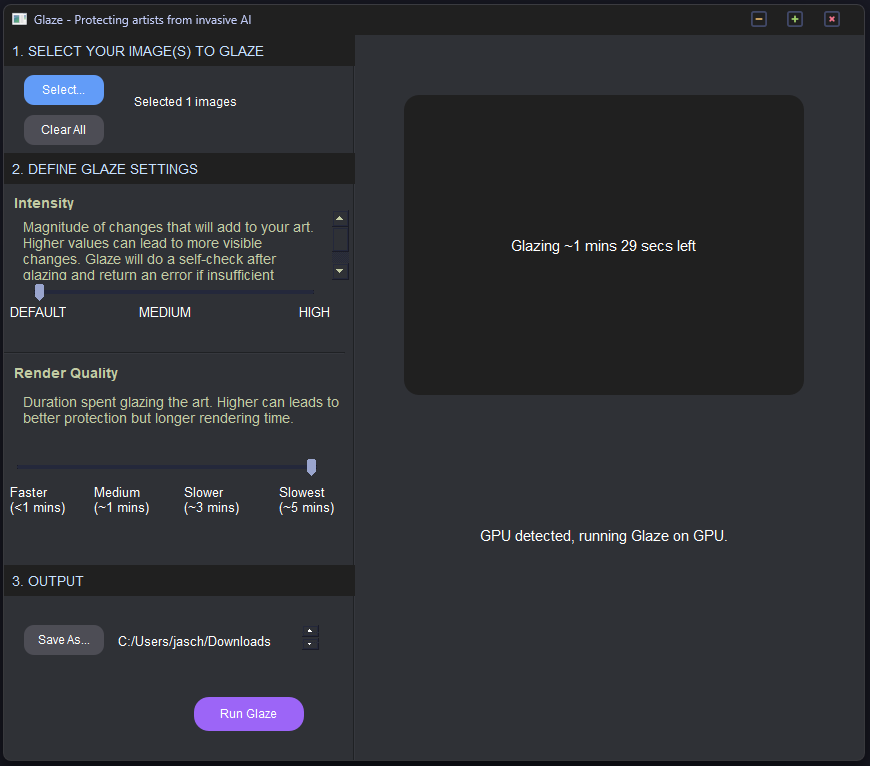

First, run your content through Glaze.

Upload Your Art

- Select your artwork by dragging it into the image placeholder or using the "Select..." button.

Set Glaze Parameters

- Intensity: Adjust the intensity of the "style cloak" for protection. Higher intensity provides stronger defence but may introduce more visible changes to your artwork. Choose "Low" or higher intensity, and consider variations for different distribution channels.

- Render Quality: Determine the compute time for optimal style cloak. Higher quality improves performance but extends processing time. GPUs, if available, significantly reduce processing time.

Preview or Run

- After selecting settings, preview the Glazed result to make intensity adjustments. Once satisfied, click Run to apply Glaze, saving the image(s) in your designated output directory with the same filename.

Note: Glaze protection effectiveness varies with art styles. Styles with smoother surfaces, like character design or animated art, may be more vulnerable. Use "Low" or higher intensity. Glaze checks the effectiveness after modification and warns you if protection falls short. Depending on your original content, you may have to experiment quite a bit to get the exact output file you want, and some settings can ruin your original image. But it's worth the time and effort to stop your content from being copied and replicated.
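When you are experimenting with several intensity settings, it can help to put a number on how much each output differs from the original instead of judging by eye alone. The sketch below is an assumption about how you might do that, not a feature of Glaze. It works on plain lists of pixel values; in practice you would load those values with an image library such as Pillow. `change_report` is a hypothetical helper name.

```python
def change_report(original, processed):
    """Summarise per-pixel differences between two equally sized images."""
    if len(original) != len(processed):
        raise ValueError("images must have the same number of pixel values")
    diffs = [abs(a - b) for a, b in zip(original, processed)]
    return {"max": max(diffs), "mean": sum(diffs) / len(diffs)}

# Made-up pixel values standing in for one original and two outputs.
original = [10, 20, 30, 40]
low_intensity = [11, 19, 30, 42]
high_intensity = [18, 12, 35, 52]

print(change_report(original, low_intensity))   # {'max': 2, 'mean': 1.0}
print(change_report(original, high_intensity))  # {'max': 12, 'mean': 8.25}
```

Larger numbers mean more visible change, so they give you a rough way to compare outputs before deciding which version to publish.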

When you have an image that you are happy with, the next part of the process is running the new image through Nightshade. This applies another layer of protection against Generative AI crawlers and scrapers.

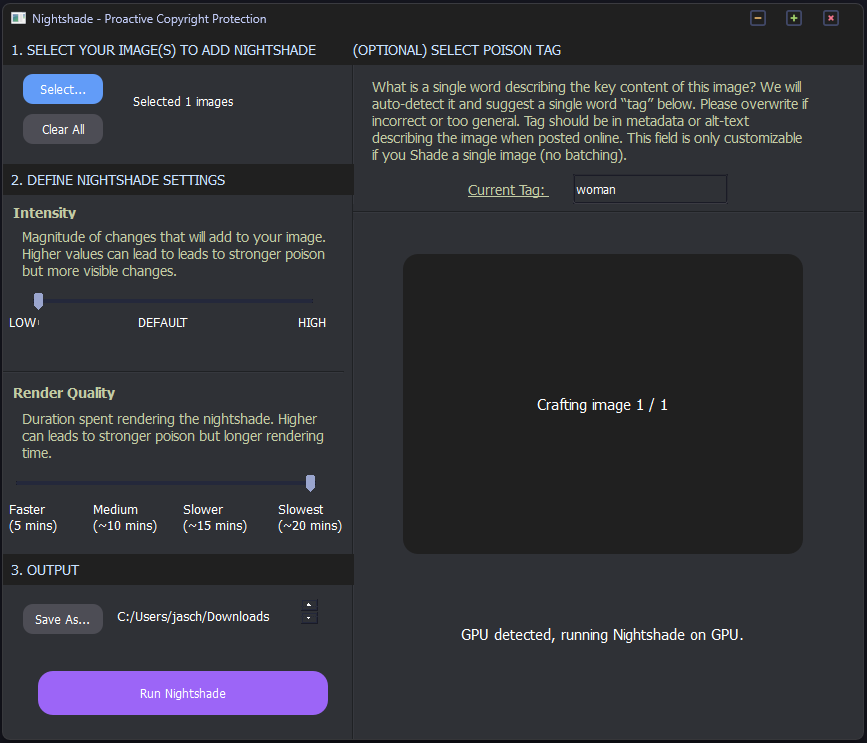

Now, run your content through Nightshade.

Upload Artwork: Select an image by dragging it into the placeholder or using the "Select..." button.

Set Parameters

- Intensity: Adjust poison intensity; higher values disrupt model associations more but take longer to process.

- Render Quality: Choose compute time for optimal poison; longer time leads to stronger effects but takes a lot longer to process.

Configure Output

- Choose the output directory for the Nightshaded image.

Assign Poison Tag

- Define the incorrect view for the AI model by selecting a concept; ensure the tag aligns with the image content.

Run Nightshade

Upload the image, confirm settings, and hit "Run" to initiate the process. The result will be saved in the chosen directory with the same filename.
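Because the result is saved with the same filename, it is worth making sure the output directory is not the folder that holds your original, or the original could be overwritten. The check below is a minimal sketch under that assumption; `safe_output_dir` is a hypothetical helper, and the paths are examples only.

```python
from pathlib import Path

def safe_output_dir(source_file, output_dir):
    """Return True if saving under the same filename cannot overwrite the source."""
    source = Path(source_file).resolve()
    target = (Path(output_dir) / source.name).resolve()
    return source != target

print(safe_output_dir("art/cat.png", "art"))        # False: would overwrite the original
print(safe_output_dir("art/cat.png", "protected"))  # True: separate folder
```

Keeping untouched originals in one folder and protected copies in another also makes it easier to re-run either tool later with different settings.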

Performance and Quality Tips.

- Nightshade's speed in CPU mode varies with available memory. Close other programs for optimal performance.

- Avoid marking Nightshaded images as such on social media so that they stay effective as a poison attack.

Ways to Protect your Content From AI Companies that Train AI Models.

With Generative AI content becoming more and more popular and more and more controversial, everyone is looking for ways to protect their content from AI systems and artificial intelligence in general. Generative AIs like Midjourney, Stable Diffusion, Adobe Firefly, DALL-E, etc. are basically just automated theft systems that take content without permission and break just about every copyright rule in the book but get away with it. So what exactly can you do about it? Well, watermarks don't work anymore, and not a lot of other tools can stop image generators from scraping and crawling content.

This is where Glaze and Nightshade come into play. Both of these tools offer protection from image generation data collection. They allow anyone to protect their artwork from AI and all the scrapers and crawlers out there used to train AI models. We are already at the point where AI technology is being used to fight AI technology, so it's going to be interesting to see how this battle unfolds moving into the future. At least for now, we have some decent options on the table to help protect your work. While AI-generated content is cool, it's not really in the best interest of anyone long term. Unless you are a massive company making bucket loads of easy money from other people's hard work...